Top 6 technical SEO action items for 2025

Prepare for 2025 with technical updates that optimize website resources, enhance visibility and boost SEO performance.

For many SEO professionals, the approach of a new year is a good time to conduct a technical SEO audit and assess how well our clients’ or employers’ websites perform in search results.

Below are the key action items to prioritize in 2025 to lay the foundation for a successful year in SEO.

1. Know and reinforce the business’ stance on LLM bots

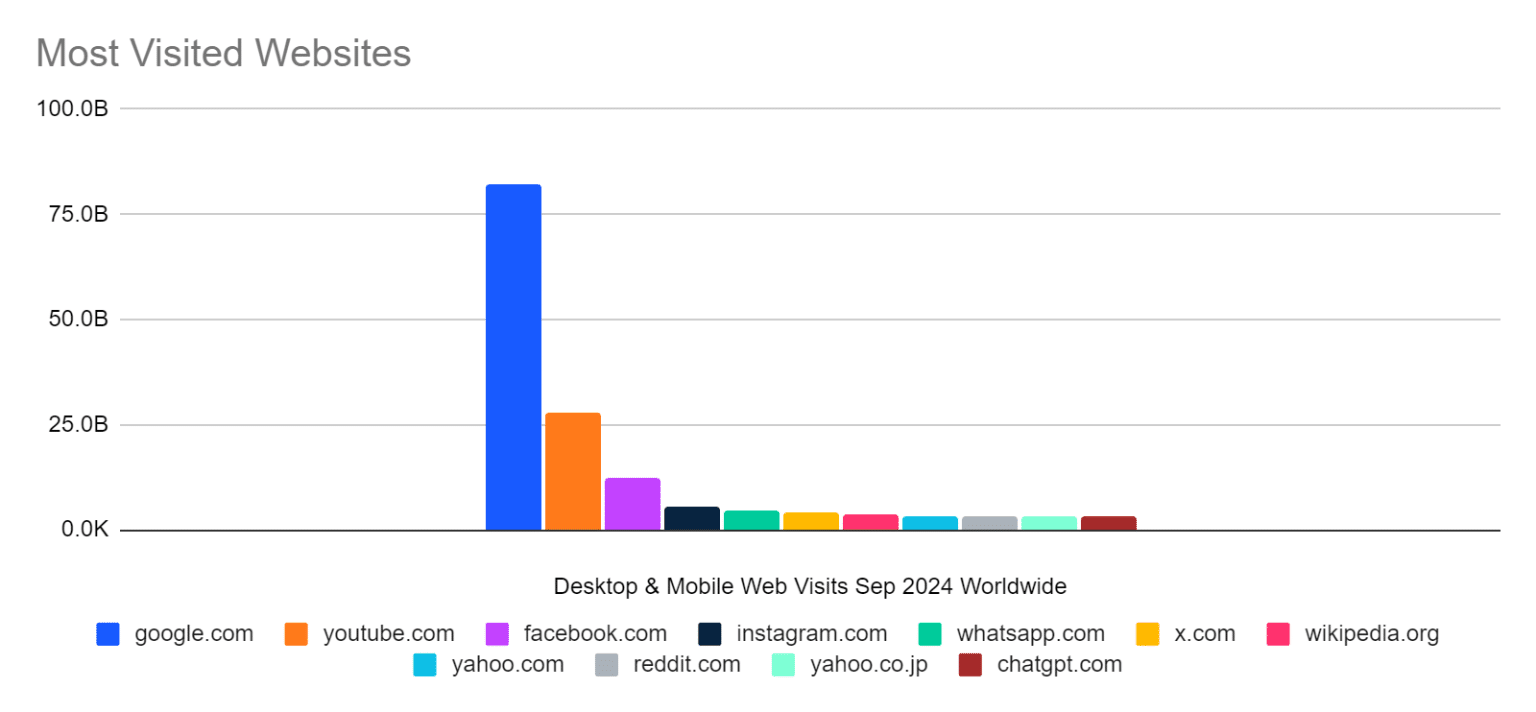

ChatGPT is not significantly impacting Google’s market share. Its monthly visits in September were estimated at 3.7% of Google’s 82 billion. (But remember, those visits aren’t all searches.)

This is a reminder to those who think ChatGPT and large language models (LLMs) will rule the web: They’re far from it at the moment, but that does not mean they don’t matter.

ChatGPT and other LLMs have been around for over two years. By now, CTOs, legal teams, and business leaders should have clear policies on how to use and manage them as internal tools or external search engines. Make sure you understand their stance.

We are in an age where Pandora’s box has been opened.

Even with the many copyright infringement lawsuits, we can’t change the fact that much of the open web has been crawled and ingested into most LLMs.

That’s not a chapter we can easily turn back.

Although I generally don’t favor LLMs, they are an important consideration for future brand and website visibility.

It’s essential to include your website in LLMs like ChatGPT, Perplexity, Claude and others.

A few ways to report on LLM traffic or visibility in AI Overviews include using:

- Regex for GA4 reporting on referrers from different common LLMs.

- Third-party tools like Semrush, Ahrefs and Ziptie that report on AI Overviews visibility.
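
As a rough sketch of the regex approach, the snippet below filters a list of referrer hostnames down to LLM products. The hostnames in the pattern are assumptions for illustration, not an authoritative list, so check them against the referrers that actually appear in your own reports.

```python
import re

# Assumed hostnames for common LLM products; verify against your own
# referral data before relying on this list.
LLM_REFERRER_PATTERN = re.compile(
    r"(^|\.)(chatgpt\.com|chat\.openai\.com|perplexity\.ai|claude\.ai|"
    r"copilot\.microsoft\.com|gemini\.google\.com)$"
)


def is_llm_referrer(hostname: str) -> bool:
    """Return True if the referrer hostname matches one of the assumed LLM hosts."""
    return LLM_REFERRER_PATTERN.search(hostname.lower()) is not None


referrers = ["chatgpt.com", "www.perplexity.ai", "www.google.com", "claude.ai"]
llm_only = [host for host in referrers if is_llm_referrer(host)]
print(llm_only)  # ['chatgpt.com', 'www.perplexity.ai', 'claude.ai']
```

The pattern uses only basic syntax, so it should carry over to a GA4 regex filter on the session source, though that is worth testing in your own property.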

If, though, your business decides not to include itself in the indices of different LLMs, make sure you’re following the correct documentation from each platform:

- OpenAI.

- Common Crawl.

- Google: Google’s advice is not to block Google-Extended, but to use nosnippet preview controls.

- Perplexity.

- Microsoft Copilot.

Many other LLMs do not publicly document their bot names, so to block them you would need to run a log file analysis, as this Akamai forum user has done with Claudebot. (I can’t find Anthropic documentation confirming this is the name of their bot or their IP range, so proceed with caution.)
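
Before deploying any blocking rules, it can help to check that they behave as intended. The sketch below uses Python’s standard `urllib.robotparser` to test a sample robots.txt. The robots.txt content and the example.com URL are illustrative only; GPTBot and CCBot are the crawler tokens OpenAI and Common Crawl publish, but confirm the current names in each platform’s documentation.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block two LLM-related crawlers, allow everyone else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /

User-agent: *
Allow: /
"""


def blocked_agents(robots_txt: str, agents: list[str], url: str) -> list[str]:
    """Return the user agents that the given robots.txt disallows for url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


blocked = blocked_agents(
    ROBOTS_TXT,
    ["GPTBot", "CCBot", "Googlebot"],
    "https://www.example.com/products/",
)
print(blocked)  # ['GPTBot', 'CCBot']
```

Keep in mind that robots.txt is a request, not an enforcement mechanism, so it only affects crawlers that choose to honor it.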

Dig deeper: 3 reasons not to block GPTBot from crawling your site

2. Check the structured data of your page templates

Structured data here means much more than schema markup.

Again, with LLMs and de-contextualized snippets in mind (think back to Google’s passage ranking systems), the content on your page should be coded properly and marked up as what it is or what it represents.

This means:

- Tables should use <table>, <th> and <tr>.

- Headings should use hierarchical <h1> to <h6> tags, without skipping levels.

- Links should be <a href> and not <button>.

- Semantic HTML elements should be used for things like block quotes, asides and videos.

This often takes more effort and discussion than expected, especially if your website relies heavily on JavaScript frameworks, so don’t skip this step.
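
A quick way to start that discussion is to audit a rendered template. The following is a minimal sketch using Python’s built-in `html.parser`; the class name and the sample markup are hypothetical, and it only checks two of the points above (skipped heading levels and links without an `href`).

```python
from html.parser import HTMLParser


class SemanticAudit(HTMLParser):
    """Flag skipped heading levels and <a> tags that have no href."""

    def __init__(self) -> None:
        super().__init__()
        self.last_level = 0
        self.issues: list[str] = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            level = int(tag[1])
            # Going deeper by more than one level means a level was skipped.
            if level > self.last_level + 1:
                self.issues.append(
                    f"heading jumps from level {self.last_level} to {level}"
                )
            self.last_level = level
        elif tag == "a" and not dict(attrs).get("href"):
            self.issues.append("<a> without href")


# Hypothetical template output for illustration.
SAMPLE = """
<h1>Shoes</h1>
<h3>Running shoes</h3>
<a href="/running">Browse running shoes</a>
<a onclick="go()">Browse trail shoes</a>
"""

audit = SemanticAudit()
audit.feed(SAMPLE)
print(audit.issues)
```

For JavaScript-heavy sites, run a check like this against the rendered DOM rather than the raw server response, since the two can differ.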

Try to add structured data to your product pages in feature set lists and schema markup, among other opportunities, to add as close to “pure data” as you can to your website.
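
As one possible illustration, a product’s feature set can be expressed as schema.org `Product` markup with `additionalProperty` entries. The sketch below builds the JSON-LD tag in Python; the product name and feature values are invented placeholders.

```python
import json

# Placeholder product; every value below is invented for illustration.
product = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Trail Shoe",
    "description": "Lightweight trail running shoe.",
    "additionalProperty": [
        {"@type": "PropertyValue", "name": "Weight", "value": "240 g"},
        {"@type": "PropertyValue", "name": "Drop", "value": "6 mm"},
    ],
}

OPEN_TAG = '<script type="application/ld+json">'
CLOSE_TAG = "</script>"

# Serialize the dictionary and wrap it in the JSON-LD script tag.
json_ld_tag = OPEN_TAG + json.dumps(product, indent=2) + CLOSE_TAG
print(json_ld_tag)
```

Ideally the same feature list also appears in the visible page content, so the markup and the page agree.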

3. Work in the margins, find the 1% fixes

We’re sprinting to an inflection point with the sheer number of documents to parse on the web.

We will soon reach a point where the challenge will no longer be ranking but indexing – or even crawling.

Due to my conservatism and wariness, those 1% technical fixes that keep getting pushed back are starting to feel like existential crises.

Each one represents an opportunity for Google to dismiss our website and decide it’s no longer worth crawling or indexing.

So, go back and pull out some of those 1% fixes that have consistently been set aside. The things you said previously weren’t worth the time or effort of your dev team.

It’s coming up to Christmas – if you don’t have a code freeze and aren’t an ecommerce website, things may be quiet anyway.

Look at things like:

- Duplicate og: tags, titles, descriptions or any other meta tag.

- Old plugins that are disabled but not deleted.

- Image sizes.

- Duplicate mobile and desktop navigation.

- Deprecated or duplicate tags in Tag Manager, Segment, etc.

- Duplicate tag manager installations. (Do you still have the code for Google Optimize installed?)

- Deprecated JavaScript on the site.

- Accessibility issues, HTML or CSS compliance issues (as reported by the W3C validators).

- Old pages or post drafts at a server level that should be deleted.

The 1% fixes are specific to your team and website, but these are some common ones. Review them and start adding them to your tickets.
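
Some of these checks are easy to script. As a minimal sketch, the snippet below counts `<title>` and `<meta>` tags in a page and reports any that appear more than once. The helper names and the sample HTML are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser


class HeadTagCounter(HTMLParser):
    """Count <title> tags and <meta> tags keyed by their property or name."""

    def __init__(self) -> None:
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.counts["title"] += 1
        elif tag == "meta":
            key = attributes.get("property") or attributes.get("name")
            if key:
                self.counts[key.lower()] += 1


def duplicate_head_tags(html: str) -> dict[str, int]:
    """Return every title/meta key that occurs more than once, with its count."""
    counter = HeadTagCounter()
    counter.feed(html)
    return {key: n for key, n in counter.counts.items() if n > 1}


# Hypothetical page head with a duplicated og:title.
SAMPLE_HEAD = """
<head>
<title>Trail shoes</title>
<meta name="description" content="Lightweight trail shoes.">
<meta property="og:title" content="Trail shoes">
<meta property="og:title" content="Trail shoes | Example Store">
</head>
"""

print(duplicate_head_tags(SAMPLE_HEAD))  # {'og:title': 2}
```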

4. Look into new properties

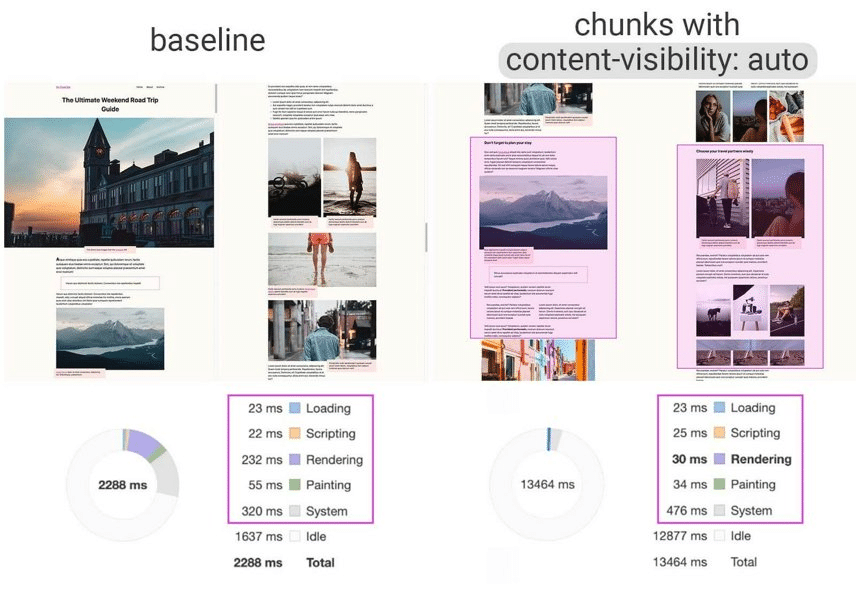

HTML, CSS, and JavaScript (including libraries and frameworks) are always evolving, and Google continuously introduces new ways to make our work more efficient and faster.

Many of these changes will help us as SEOs, including:

- Prerendering: Basically, you can use this hint to tell the browser the next most likely page a person will visit, which can then be pre-rendered in the background.

- Content visibility: This is essentially lazy rendering. The browser skips layout and painting for off-screen content until it is needed, which can make the initial render much faster.
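
To make both ideas more concrete, here is a small sketch. It assumes prerendering is done through the Speculation Rules API supported by Chromium-based browsers, which may not be the exact mechanism meant above, and it pairs that with a CSS snippet for `content-visibility`. The URL, class name and size are placeholders.

```python
import json


def speculation_rules_tag(urls: list[str]) -> str:
    """Build a <script type="speculationrules"> tag that prerenders the given URLs."""
    rules = {"prerender": [{"urls": urls}]}
    return f'<script type="speculationrules">{json.dumps(rules)}</script>'


# CSS sketch for content-visibility; the class name and size are placeholders.
CONTENT_VISIBILITY_CSS = """\
.below-the-fold {
  content-visibility: auto;
  contain-intrinsic-size: auto 600px;
}
"""

print(speculation_rules_tag(["/checkout/"]))
print(CONTENT_VISIBILITY_CSS)
```

Browsers that don’t support these features simply ignore them, but test the effect on your own templates before rolling either out widely.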

Google’s Developer blogs are a good place to start if you’re looking for opportunities to shift the needle at the bleeding edge.

Even the links in your page speed test reports should include information on potential ways to improve site speed, such as chunking or windowing.

These changes may require significant time and effort from your developers, so it’s important to discuss them with your team. Assess the work and build a clear business case for their importance.

If it’s a choice between the 1% fixes and these, I’d personally prioritize addressing the known technical debt first, unless the improvement from the new properties is drastic (over 30%).

5. Sunset any 301 redirects older than a year

Historically, I’ve advocated for keeping your 301 redirects forever, but I’m starting to change my mind.

Why?

When you have a massive list of 301 redirects, the server may need to check the requested URL against that list every time a page loads, depending on how the redirects are configured. This takes time and resources, which are becoming scarcer and more precious.

Google’s stance is to keep 301 redirects for at least a year. Unless you have strong proof from your own analytics platform that your old URLs are getting significant traffic, preserve the resources you have.

Remove the 301 redirects that are over a year old.
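
A simple way to build the removal list is to combine each redirect’s age with its recent traffic. The sketch below is one way to do that, assuming you can export the creation date and a recent hit count for every redirect. The `Redirect` fields, the 90-day window and the `min_hits` threshold are all hypothetical choices, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Redirect:
    source: str
    target: str
    created: date
    hits_last_90_days: int  # hypothetical figure from your analytics or logs


def redirects_to_retire(redirects, today, max_age_days=365, min_hits=10):
    """Return redirects older than max_age_days that get fewer than min_hits."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        r for r in redirects
        if r.created < cutoff and r.hits_last_90_days < min_hits
    ]


# Invented sample data for illustration.
redirects = [
    Redirect("/old-sale", "/sale", date(2022, 3, 1), 2),
    Redirect("/old-blog", "/blog", date(2021, 6, 15), 480),
    Redirect("/spring-promo", "/promo", date(2024, 9, 1), 0),
]

for r in redirects_to_retire(redirects, today=date(2024, 12, 1)):
    print(r.source)  # /old-sale
```

Here `/old-blog` is kept because it still gets traffic, and `/spring-promo` is kept because it is less than a year old.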

Dig deeper: An SEO’s guide to redirects

6. Consolidate content

I know this isn’t technical SEO, but it’s related to resource management, like sunsetting your year-old 301 redirects.

The more content you have, the heavier your website becomes, with more pages to crawl and index.

If you have similar content from 2013, 2017 and 2022, rather than keeping separate pages for each, consider consolidating them for both crawl management and visibility.

Google doesn’t focus on keywords or years; it’s looking for the most relevant answer to the query.

Group your content to answer questions, not target specific keywords.

Technical SEO is foundational

There is more to consider with technical SEO that isn’t included here because it should be part of our default checks, such as:

- Checking internal links to see if they pass through redirect hops or are broken.

- Managing site security.

- Implementing 301 redirects and managing 404s.

- Having a consistent site structure.

I’ve shared above what I believe will become more important to technical SEO going forward.

Now is the perfect time to implement the fixes and updates to set the stage for sustained success in 2025.